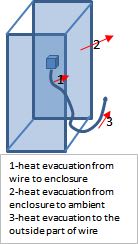

My basic understanding is that the higher the ambient temperature inside a device, the lower the current capacity of a wire. So the current capacity has to be de-rated by a certain percentage based on how high the temperature is. That is as far as I get.

But how do you figure out how much the elevated temperature impacts the wire? A lot of internet searching gave me a lot of inconclusive results.

Here's my setup:

Our device will pull about 13 A max, continuously.

The conductors inside the unit that carry full current are all 16 AWG (105 °C jacket).

1 - 16 AWG wire should be able to handle 22 A, correct?

2 - Temperature inside the unit can reach approximately 65 °C. Does that de-rate the current-carrying capacity? By how much?

3 - If we are borderline with our ratings, could a missed strand in a crimp, or a loose crimp, put us over the limit?

(I'm a Mech E, so please don't get too technical in your answers)

Thanks.
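For reference, here is a rough sketch of the kind of estimate I'm asking about, using the numbers above. It uses the square-root ambient correction found in the NEC ampacity rules, where the allowed current scales with the square root of the remaining temperature headroom. The 30 °C reference ambient for the 22 A figure is my assumption (it depends on which ampacity table that number came from), and the function name is just something I made up for illustration. It also ignores bundling, airflow, and crimp quality, so treat it as a ballpark, not a rating.

```python
import math

def derated_ampacity(rated_amps, insulation_max_c, rated_ambient_c, actual_ambient_c):
    """Estimate ampacity at a higher ambient using the square-root correction.

    factor = sqrt((T_insulation - T_actual) / (T_insulation - T_rated))
    """
    if actual_ambient_c >= insulation_max_c:
        # No temperature headroom left for the wire to heat up under load.
        return 0.0
    factor = math.sqrt(
        (insulation_max_c - actual_ambient_c) / (insulation_max_c - rated_ambient_c)
    )
    return rated_amps * factor

# 22 A rating (ASSUMED to be at 30 C ambient), 105 C insulation, 65 C inside the unit
estimate = derated_ampacity(22.0, 105.0, 30.0, 65.0)
print(f"{estimate:.1f} A")  # prints "16.1 A"
```

If that assumption holds, the estimate comes out at roughly 16 A against our 13 A load, which is why I'm worried about how much margin is really left.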
