GD_P

Structural

- Apr 6, 2018

- 128

Hello community,

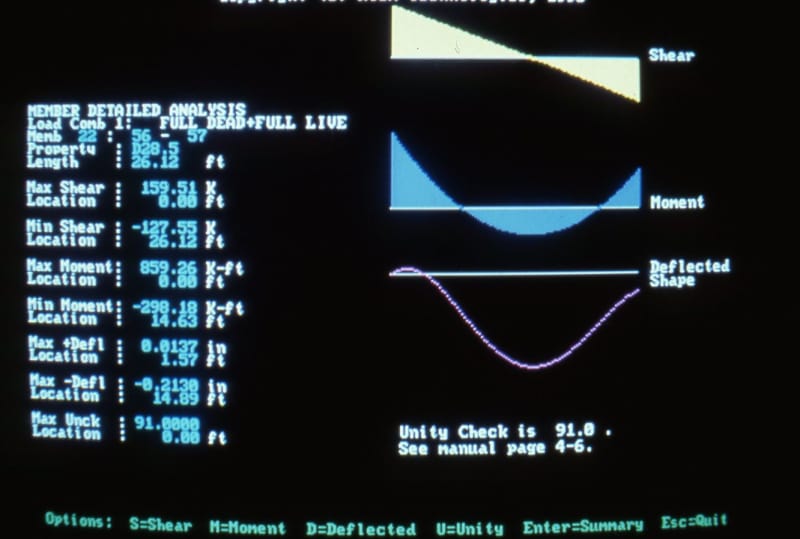

I have a question regarding the validation of structural analysis and design software.

Almost all of us use software for structural design.

Do you validate it, and if so, to what extent?

1) Analysis

2) Design as per the applicable code

3) Applicability of points 1 & 2 to:

a) Only simple members, i.e., one beam and one column, OR

b) 2D / 3D frames with columns, beams, bracings, a number of storeys, etc.

4) Other tools such as response spectrum analysis, wind load generation, time history analysis, etc.

I think the validation should cover all the aspects mentioned in points 1 to 4;

otherwise, if the analysis is wrong but the design is correct, such a validation doesn't make any sense.
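To illustrate the kind of check I have in mind for point 3a, here is a minimal sketch in Python that compares a software result against a closed-form hand calculation for a simply supported beam under a uniformly distributed load. The function names, the 1% tolerance, and the input values are my own assumptions for illustration only; the "software" numbers would have to be read from the actual program output, and nothing here is STAAD Pro output.

```python
import math


def ss_beam_udl_max_moment(w, L):
    """Midspan moment of a simply supported beam under UDL: w*L^2/8."""
    return w * L**2 / 8.0


def ss_beam_udl_max_deflection(w, L, E, I):
    """Midspan deflection, Euler-Bernoulli theory: 5*w*L^4 / (384*E*I).

    Shear deformation is ignored here, so a program that includes it
    may report a slightly larger value.
    """
    return 5.0 * w * L**4 / (384.0 * E * I)


def agrees(hand, software, rel_tol=0.01):
    """True if the two values match within a relative tolerance.

    The 1% default is an assumed acceptance criterion, not a code requirement.
    """
    return math.isclose(hand, software, rel_tol=rel_tol)


# Hypothetical inputs in consistent units (kN, m): not taken from any real model.
w = 10.0      # kN/m
L = 6.0       # m
E = 200e6     # kN/m^2 (steel)
I = 8.0e-5    # m^4

hand_moment = ss_beam_udl_max_moment(w, L)
hand_deflection = ss_beam_udl_max_deflection(w, L, E, I)

# Replace these placeholders with the values reported by the software.
software_moment = hand_moment
software_deflection = hand_deflection

print("moment OK:", agrees(hand_moment, software_moment))
print("deflection OK:", agrees(hand_deflection, software_deflection))
```

The same pattern could be repeated for a column or a small frame with a known solution, but that only covers the analysis side (point 1), not the code checks in point 2.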

We use STAAD Pro and are thinking of validating it. I just want to know how other engineers handle this.

Any help would be appreciated.

GD_P
