Sparweb

Aerospace

- May 21, 2003

At work we have a library of reference data, which all of our 30+ engineers can access and refer to as they work.

It contains design manuals, regulatory guidance, vendor datasheets, industry standards, etc. The library has the following approximate dimensions:

120 GB, 170,000 files, 7200 folders

It grows significantly every year.

I have found that newer members of our engineering staff, not familiar with our knowledge base, have trouble using it.

For our younger engineers, it is a matter of believing that the information exists and/or that we could possibly know something that Google doesn't.

For our older engineers, like me, when things are stored on a path I wouldn't expect to follow, I have trouble finding other people's additions.

There was a time when Google Toolbar could be used to search the library and find information efficiently, but GT has gone by the wayside.

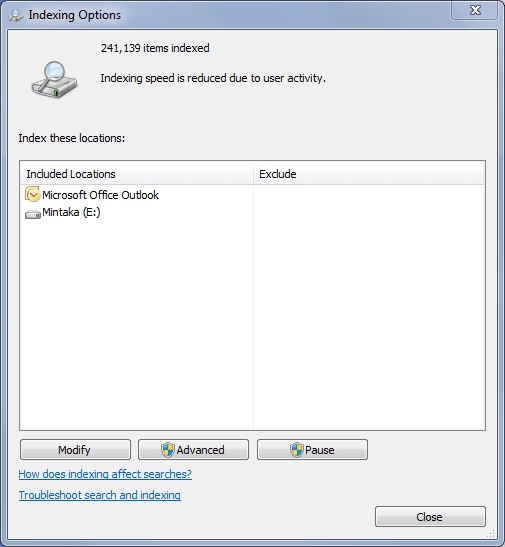

To find things now, we rely on a rough system of organization... and the horrible Windows search bar above the folder viewer.

I've been looking at file search tools and found some candidates, but I thought I'd ask whether Eng-Tips members have any recommendations before I commit.

"Copernic" and "UltraFileSearch" are the two contenders I like.

STF

The search did take nearly 2 hours, as I predicted.
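For comparison, here is a minimal sketch of the indexing approach that desktop search tools generally take: walk the library once, keep the list of file paths, then answer queries from that list instead of rescanning 170,000 files every time. This is an illustration only, not how Copernic or UltraFileSearch actually work. The share path `\\server\library` and the function names `build_index` and `search` are hypothetical.

```python
import os


def build_index(root):
    """Walk the library once and record every file path (names only, no file contents)."""
    index = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            index.append(os.path.join(dirpath, name))
    return index


def search(index, *terms):
    """Return indexed paths whose file name contains every term (case-insensitive)."""
    lowered = [t.lower() for t in terms]
    return [
        path
        for path in index
        if all(t in os.path.basename(path).lower() for t in lowered)
    ]


if __name__ == "__main__":
    # Hypothetical share path; replace with the library's real location.
    # The slow part (the walk) happens once; each query after that is an in-memory scan.
    library_index = build_index(r"\\server\library")
    for hit in search(library_index, "datasheet", "bearing"):
        print(hit)
```

This sketch matches file names only. Searching inside PDFs and Office documents would also require text extraction, which it does not attempt, and a real tool would keep the index on disk and update it as the library grows.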
