Jensdsds

Electrical

- Jun 9, 2021

- 33

Hello, I'm a student in electrical engineering and I'm doing a project using the COMSOL Multiphysics tool to predict the temperature of a cable carrying a specific current.

The test is done in real life and then simulated in COMSOL, to see if it's possible to predict the measured temperature.

Currently we have some problems understanding the nature of the heat transfer coefficient in the Heat Flux module.

My simulation is tuned so that it fits the cable dimensions etc., and the last parameter tuned was the heat transfer coefficient (HTC).

This was set to 12 W/(m^2*K). The first test was a copper cable, which reached 32 °C at 50 A.

For my second test, I tested an aluminium cable, which got a lot hotter than my copper cable due to the different size.

At the higher temperatures the simulation became less accurate.

At temperatures around 32 °C, the simulation was more accurate.

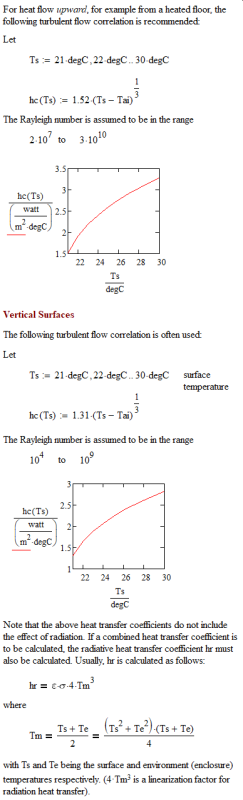

So from my standpoint it seems that as the deltaT between the cable surface and the ambient air becomes larger, the heat transfer coefficient also becomes larger.

Is this a correct assumption?
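One way to sanity-check this assumption is a textbook free-convection estimate. The sketch below uses the Churchill-Chu correlation for a horizontal cylinder in still air. The cable diameter, ambient temperature and air properties are assumed example values, not numbers from my test, and this is not necessarily what COMSOL does internally. It also ignores radiation, which grows with surface temperature as well.

```python
def natural_convection_htc(delta_t, diameter=0.01, t_ambient_c=20.0):
    """Estimate h [W/(m^2*K)] for a horizontal cylinder in still air.

    Uses the Churchill-Chu correlation. All default values are assumptions.
    """
    # Assumed air properties near room temperature, held constant for simplicity
    k_air = 0.026     # thermal conductivity, W/(m*K)
    nu_air = 1.6e-5   # kinematic viscosity, m^2/s
    pr = 0.71         # Prandtl number
    g = 9.81          # gravitational acceleration, m/s^2

    # Ideal-gas expansion coefficient evaluated at the film temperature
    t_film_k = t_ambient_c + 273.15 + delta_t / 2.0
    beta = 1.0 / t_film_k

    # Rayleigh number based on the cylinder diameter
    alpha = nu_air / pr  # thermal diffusivity, m^2/s
    ra = g * beta * delta_t * diameter**3 / (nu_air * alpha)

    # Churchill-Chu Nusselt number for a horizontal cylinder
    nusselt = (0.60 + 0.387 * ra**(1 / 6)
               / (1 + (0.559 / pr)**(9 / 16))**(8 / 27))**2
    return nusselt * k_air / diameter


if __name__ == "__main__":
    for dt in (10, 40, 80):
        print(f"deltaT = {dt:3d} K -> h = {natural_convection_htc(dt):.2f} W/(m^2*K)")
```

Under these assumptions the estimated h rises as deltaT rises, which would be consistent with a single constant HTC fitting the cooler cable better than the hotter one.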

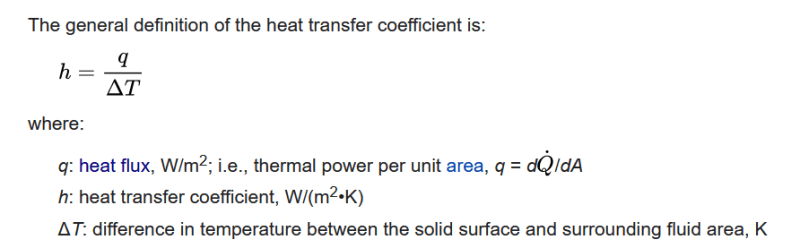

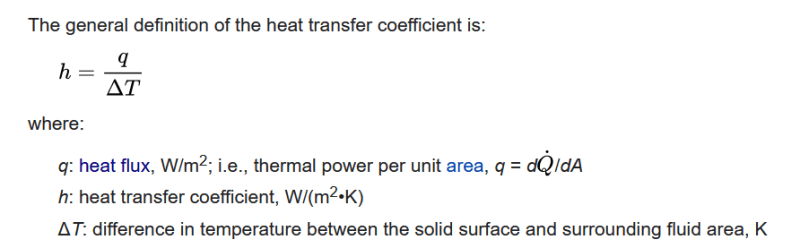

In this picture, the HTC depends on the deltaT and the heat flux.

The heat flux is almost constant, apart from a small increase caused by the higher resistance at higher temperatures.

But looking at the formula, if the deltaT rises, shouldn't the HTC become smaller?
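To make the formula concrete, here is a minimal sketch that back-calculates an effective HTC from a measured surface temperature, assuming a steady state in which all Joule heating per metre of cable leaves through the surface: I^2 * R' = h * pi * D * deltaT. The function name and all numeric inputs are hypothetical, not values from my test.

```python
import math


def effective_htc(current_a, resistance_per_m, diameter_m, t_surface_c, t_ambient_c):
    """Back-calculate h [W/(m^2*K)] from a steady-state energy balance."""
    heat_per_m = current_a**2 * resistance_per_m   # Joule heating, W per metre
    area_per_m = math.pi * diameter_m              # surface area, m^2 per metre
    heat_flux = heat_per_m / area_per_m            # W/m^2
    return heat_flux / (t_surface_c - t_ambient_c)


if __name__ == "__main__":
    # Hypothetical inputs: 50 A, 1 mOhm/m, 10 mm diameter, 32 degC surface, 20 degC air
    print(effective_htc(50.0, 1e-3, 0.01, 32.0, 20.0))
```

Read this way, the formula only defines h from a flux and a deltaT that are both measured. It does not by itself say how h changes when the cable gets hotter, since in a real test the flux is set by the current and the deltaT settles to whatever the cooling allows.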

So to sum up my question: is the HTC a constant value, or does it go up or down as the deltaT rises?

Thank you in advance, and pardon my spelling errors and my inexperience with thermodynamics.

Best regards, Jens
