Ah yes, it’s that time again folks. A paper is released, in this case Lewis and Curry 2014, that says climate sensitivity is on the low end of the spectrum and the “skeptic” community starts banging pots and pans claiming the ACC theory is dead. Well, like most things in the field of climate science, it's not nearly that simple. Let's look at the entire story.

Equilibrium Climate Sensitivity (ECS) and Transient Climate Response (TCR)

Equilibrium Climate Sensitivity (ECS) – the amount the planet will warm in response to a doubling of atmospheric CO2 concentration (the base is usually taken from preindustrial levels of 280 ppm). ECS includes both fast and slow feedbacks, so ECS is not fully realized for decades or centuries after CO2 is held constant.

Transient Climate Response (TCR) – similar to ECS but only includes fast responding feedbacks. In other words, TCR is the temperature rise at the time atmospheric concentrations hit 2x the baseline, not where it will settle out to. As slow responding feedbacks tend to be positive, TCR is smaller than ECS.

These two are not the same and should not be confused. Many “skeptic” arguments prey on this confusion, be careful.

The Body of Knowledge on Climate Sensitivity

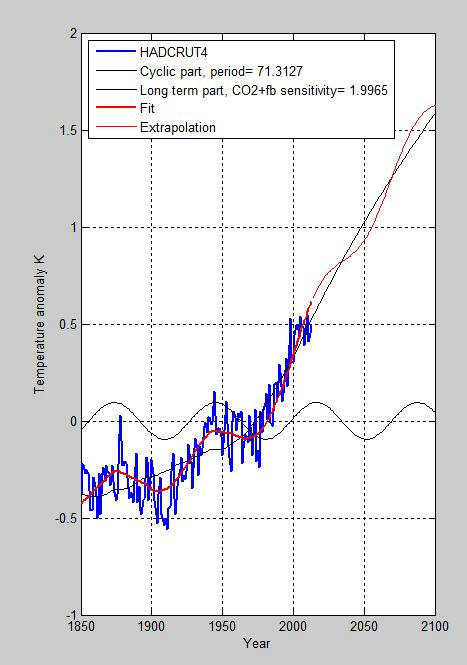

First, here’s a good list of the spectrum of peer reviewed literature addressing climate sensitivity. If you actually want to understand the topic (instead of cherry picking things that fit your viewpoint), it’s import to look at the body of work, that’s kinda how science works. Here’s a graphical representation, from AR5 WG1 Fig Box 12.2-1:

[image ]

To claim that a single paper can definitely set climate sensitivity, is false. While on the low side, Lewis and Curry 2014 does sit within the spectrum of other estimates.

Lewis and Curry 2014

Now to the paper itself. Lewis and Curry 2014 (LC14) is very similar to Otto et al 2013 (they both take the energy balance model approach), just with different heat uptake rates and reference periods.

LC14 has a heat uptake rate (0.36 Wm^-2) that is almost half of Otto et al 2013 (0.65 Wm^-2). The uptake rate used in LC14 comes from a single model, not an ensemble mean, and is, surprise, surprise, a very low value (which leads to lower ECS).

The ending reference period (1995-2011) was selected to “avoid major volcanic activity”. Although this seems odd considering Vernier et al. 2011 found that volcanic activity greatly affected the 2000’s. Furthermore, it is well known that the last decade has been a La Nina dominated period which would further add a cooling bias to their ending reference period, and thus artificially lower their ECS and TCR estimates.

Now new evidence (Durack et al 2014) suggests that “observed estimates of 0-700 dbar global warming since 1970 are likely biased low. This underestimation is attributed to poor sampling of the Southern Hemisphere”. Using the results of Durack et al 2014, the ECS would rise (15% according to a tweet from Gavin Schmidt).

The paper makes no mention of Cowtan & Way 2013 which demonstrates and corrects the cooling bias in HadCRUT caused by a lack of coverage in the heavily warming Arctic. Therefore, much of the recent warming which is occurring in the Arctic is unaccounted for in this paper. This would cause an artificially lower value of ECS and TCR.

The paper also ignores Shindell 2014 and Kummer & Dessler 2014 (most likely because they are too recent). Both of these papers highlight the inhomogeneities in aerosol forcing which may cause energy balance models to underestimate ECS and TCR.

Finally, the rather simplistic technique used in LC14 (and Otto et al 2013 as well) ignores all non-linearities in feedbacks and inhomogeneities in forcings. The exclusion of these elements leads to a lowering bias in TCR and ECS. Due to the fact the sample period and technique used introduce lowering biases into the results, LC14 may be useful in establishing the lower bound of sensitivity but in no way offers a conclusive value for the median or best estimate.

It should be noted that the results of Lewis and Curry 2104 implicitly accept and endorse the core of the Anthropogenic Climate Change theory; namely that increases in atmospheric CO2 will result in increases in global temperatures and that feedbacks will amplify the effect. For example, if you feel that the recent rise in global temperatures is due to land use changes and not CO2, then the TCR and ECS to a doubling of CO2 should be near zero. Or, if you feel that "it's the sun" and not CO2 then the TCR and ECS to a doubling of CO2 should be near zero. The recent change in climate is "just natural" and not CO2 you say? Well then TCR and ECS should, again, be near zero. So, if you've found yourself claiming any of the preceding and now find yourself trumpeting the results of LC14 as proof for your side, then you, unfortunately, are deeply confused. If you want to accept LC14's value for TCR of 1.33 K as THE value for TCR (which it isn't), then you also accept that majority of global warming is due to anthropogenic CO2 emissions.

What About Other Papers that Claim Lower Sensitivity?

As I stated from the outset, Lewis and Curry 2014 is hardly the only paper to address climate sensitivity. Beyond that, it’s hardly the only paper to suggest that climate sensitivity is on the lower end of the IPCC spectrum. I’ve addressed a few already but there are more (Lindzen 2001, Spencer & Braswell 2008, etc.). However, almost all of these papers have been found to have some significant flaws that cast doubt on their conclusions. Various peer reviewed rebuttals to these papers are listed below. I’d welcome readers to review the rebuttals and the original authors response to them.

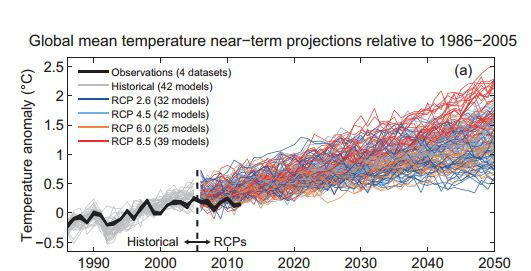

[image ]

...But What if Climate Sensitivity WAS Lower Than Expected

Let’s ignore all this for a second and pretend that, with Lewis and Curry, we can definitively say that climate sensitivity is lower than expected. Then what? Does this completely debunk the ACC theory? Does this mean rising CO2 levels really aren’t a concern? Well, many “skeptics” would say “YES!” but they do so without ever actually examining the issue.

According to Myles Allen, head of the Climate Dynamics group at Oxford:

The issue is that, with atmospheric CO2 levels rising as quickly as they are, a lower TCR does not mean anything significant. It just means that the effects will be delayed slightly. So even if “skeptics” were correct in saying that climate sensitivity is definitely at the lower end of the IPCC range (which they’re not), it would have no substantial impact on future global temperatures or the need to control CO2 emissions.

So, Lewis and Curry 2014 is:

1) Inconclusive to definitely say that climate sensitivity is on the low end of the IPCC spectrum

2) The results are suspect and appear to include numerous biases that would lead to lower TCR and ECS

3) Even if it were conclusive and accurate, it would still not suggest that reductions in CO2 emissions are unnecessary. In fact, it adds to the scientific body of knowledge that temperatures will continue to rise to unsafe levels if we continue with the status-quo, just maybe a decade later than other estimates.

(Note: I’ve started this new thread to discuss climate sensitivity specifically. It is an important topic that popped up in another thread and I felt it merited its own discussion. I would, as much as possible, like to keep the conversation on this subject…although this is likely wishful thinking)

Equilibrium Climate Sensitivity (ECS) and Transient Climate Response (TCR)

Equilibrium Climate Sensitivity (ECS) – the amount the planet will warm in response to a doubling of atmospheric CO2 concentration (the base is usually taken from preindustrial levels of 280 ppm). ECS includes both fast and slow feedbacks, so ECS is not fully realized for decades or centuries after CO2 is held constant.

Transient Climate Response (TCR) – similar to ECS but only includes fast responding feedbacks. In other words, TCR is the temperature rise at the time atmospheric concentrations hit 2x the baseline (conventionally in a scenario where CO2 rises 1% per year), not where the temperature will eventually settle. As slow responding feedbacks tend to be positive, TCR is smaller than ECS.

These two are not the same and should not be confused. Many “skeptic” arguments prey on this confusion, so be careful.

The Body of Knowledge on Climate Sensitivity

First, here’s a good list of the spectrum of peer reviewed literature addressing climate sensitivity. If you actually want to understand the topic (instead of cherry picking things that fit your viewpoint), it’s important to look at the whole body of work; that’s kinda how science works. Here’s a graphical representation, from AR5 WG1 Fig Box 12.2-1:

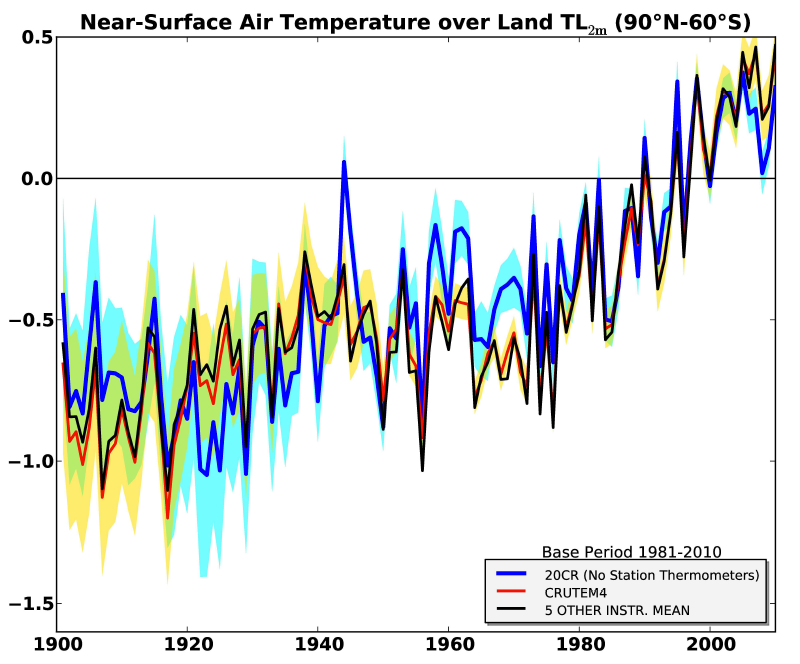

The claim that a single paper can definitively settle climate sensitivity is false. While on the low side, Lewis and Curry 2014 does sit within the spectrum of other estimates.

Lewis and Curry 2014

Now to the paper itself. Lewis and Curry 2014 (LC14) is very similar to Otto et al 2013 (they both take the energy balance model approach), just with different heat uptake rates and reference periods.

LC14 has a heat uptake rate (0.36 W m^-2) that is a little over half that of Otto et al 2013 (0.65 W m^-2). The uptake rate used in LC14 comes from a single model, not an ensemble mean, and is, surprise, surprise, a very low value (which leads to a lower ECS).
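To see why the heat uptake rate matters so much, here is a minimal sketch of the standard energy balance relations, ECS = F_2x * dT / (dF - dQ) and TCR = F_2x * dT / dF. Only the two heat uptake rates come from this post. The values for F_2x, dT and dF are illustrative assumptions, picked so that TCR lands near the 1.33 K quoted further down; they are not taken from either paper here. The comparison also holds every other input fixed, which is not what the two papers actually did (they use different reference periods).

```python
# Energy balance estimates of climate sensitivity.
# A sketch under stated assumptions, not the code used in LC14 or Otto et al 2013.

F_2X = 3.71     # W m^-2, forcing from a doubling of CO2 (assumed value)
DELTA_T = 0.71  # K, warming between base and final periods (assumed value)
DELTA_F = 1.98  # W m^-2, forcing change between the periods (assumed value)


def tcr(delta_t=DELTA_T, delta_f=DELTA_F, f_2x=F_2X):
    """Transient response: warming scaled to the forcing of one CO2 doubling."""
    return f_2x * delta_t / delta_f


def ecs(delta_q, delta_t=DELTA_T, delta_f=DELTA_F, f_2x=F_2X):
    """Equilibrium sensitivity: the heat uptake delta_q is forcing that has
    not yet shown up as surface warming, so it is subtracted from delta_f."""
    return f_2x * delta_t / (delta_f - delta_q)


# Heat uptake rates quoted in the post (W m^-2).
for label, q in [("LC14", 0.36), ("Otto et al 2013", 0.65)]:
    print(f"{label}: heat uptake {q:.2f} W m^-2 -> ECS ~ {ecs(q):.2f} K")
print(f"TCR (independent of heat uptake) ~ {tcr():.2f} K")
```

With everything else held equal, swapping the smaller heat uptake for the larger one raises the ECS estimate by roughly a fifth in this sketch, while TCR does not move at all because heat uptake does not enter it.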

The ending reference period (1995-2011) was selected to “avoid major volcanic activity”, although this seems odd considering Vernier et al. 2011 found that volcanic activity greatly affected the 2000s. Furthermore, it is well known that the last decade has been a La Niña dominated period, which would add a further cooling bias to their ending reference period and thus artificially lower their ECS and TCR estimates.

Now new evidence (Durack et al 2014) suggests that “observed estimates of 0-700 dbar global warming since 1970 are likely biased low. This underestimation is attributed to poor sampling of the Southern Hemisphere”. Using the results of Durack et al 2014, the ECS estimate would rise (by about 15%, according to a tweet from Gavin Schmidt).

The paper makes no mention of Cowtan & Way 2013 which demonstrates and corrects the cooling bias in HadCRUT caused by a lack of coverage in the heavily warming Arctic. Therefore, much of the recent warming which is occurring in the Arctic is unaccounted for in this paper. This would cause an artificially lower value of ECS and TCR.

The paper also ignores Shindell 2014 and Kummer & Dessler 2014 (most likely because they are too recent). Both of these papers highlight the inhomogeneities in aerosol forcing which may cause energy balance models to underestimate ECS and TCR.

Finally, the rather simplistic technique used in LC14 (and Otto et al 2013 as well) ignores all non-linearities in feedbacks and inhomogeneities in forcings. Excluding these elements biases TCR and ECS low. Because both the sample period and the technique introduce low biases into the results, LC14 may be useful in establishing the lower bound of sensitivity but in no way offers a conclusive value for the median or best estimate.

It should be noted that the results of Lewis and Curry 2014 implicitly accept and endorse the core of the Anthropogenic Climate Change theory; namely that increases in atmospheric CO2 will result in increases in global temperatures and that feedbacks will amplify the effect. For example, if you feel that the recent rise in global temperatures is due to land use changes and not CO2, then the TCR and ECS to a doubling of CO2 should be near zero. Or, if you feel that "it's the sun" and not CO2, then the TCR and ECS to a doubling of CO2 should be near zero. The recent change in climate is "just natural" and not CO2, you say? Well then TCR and ECS should, again, be near zero. So, if you've found yourself claiming any of the preceding and now find yourself trumpeting the results of LC14 as proof for your side, then you, unfortunately, are deeply confused. If you want to accept LC14's value for TCR of 1.33 K as THE value for TCR (which it isn't), then you also accept that the majority of global warming is due to anthropogenic CO2 emissions.
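A rough back-of-the-envelope check of that last point, as a sketch only. It uses the 1.33 K TCR and the 280 ppm baseline from this post, plus the standard approximation that CO2 forcing scales with the logarithm of concentration. The current concentration (400 ppm) and the observed warming (0.8 K) are round-number assumptions of mine, and the sketch ignores every other forcing (other greenhouse gases, aerosols, and so on).

```python
import math

TCR = 1.33             # K per CO2 doubling, the LC14 value quoted in the post
C_BASE = 280.0         # ppm, preindustrial baseline from the post
C_NOW = 400.0          # ppm, assumed round figure for today's concentration
OBSERVED_WARMING = 0.8 # K, assumed round figure for warming to date


def co2_transient_warming(c_now, c_base=C_BASE, tcr=TCR):
    """Transient warming attributable to CO2 alone.

    CO2 forcing is roughly logarithmic in concentration, so the number of
    doublings reached so far is log2(c_now / c_base).
    """
    doublings = math.log2(c_now / c_base)
    return tcr * doublings


warming = co2_transient_warming(C_NOW)
share = warming / OBSERVED_WARMING
print(f"CO2-driven warming at TCR = {TCR} K: {warming:.2f} K")
print(f"Share of the assumed {OBSERVED_WARMING} K observed: {share:.0%}")
```

Under these assumptions, even the low LC14 value for TCR puts the CO2 contribution at well over half of the warming seen so far, which is the opposite of "it's not CO2".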

What About Other Papers that Claim Lower Sensitivity?

As I stated from the outset, Lewis and Curry 2014 is hardly the only paper to address climate sensitivity. Beyond that, it’s hardly the only paper to suggest that climate sensitivity is on the lower end of the IPCC spectrum. I’ve addressed a few already but there are more (Lindzen 2001, Spencer & Braswell 2008, etc.). However, almost all of these papers have been found to have significant flaws that cast doubt on their conclusions. Various peer reviewed rebuttals to these papers are listed below. I’d welcome readers to review the rebuttals and the original authors’ responses to them.

[image ]

...But What if Climate Sensitivity WAS Lower Than Expected?

Let’s ignore all this for a second and pretend that, with Lewis and Curry, we can definitively say that climate sensitivity is lower than expected. Then what? Does this completely debunk the ACC theory? Does this mean rising CO2 levels really aren’t a concern? Well, many “skeptics” would say “YES!” but they do so without ever actually examining the issue.

According to Myles Allen, head of the Climate Dynamics group at Oxford:

“A 25 per cent reduction in TCR would mean the changes we expect between now and 2050 might take until early 2060s instead… So, even if correct, it is hardly a game-changer… any revision in the lower bound on climate sensitivity does not affect the urgency of mitigation.”

The issue is that, with atmospheric CO2 levels rising as quickly as they are, a lower TCR does not mean anything significant. It just means that the effects will be delayed slightly. So even if “skeptics” were correct in saying that climate sensitivity is definitely at the lower end of the IPCC range (which they’re not), it would have no substantial impact on future global temperatures or the need to control CO2 emissions.
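Here is a minimal sketch of the arithmetic behind that "delayed slightly" claim. It assumes that forcing keeps growing roughly linearly and that the change in temperature is proportional to TCR times the change in forcing, so a lower TCR simply stretches the time needed to reach the same change. The start year of 2014 is my assumption for "now", and `delayed_year` is a hypothetical helper, not anything from Allen's analysis.

```python
def delayed_year(start_year, target_year, tcr_reduction):
    """Year in which a reduced-TCR world sees the change originally
    expected by target_year.

    Assumes linear forcing growth, so the time required scales as
    1 / (1 - tcr_reduction).
    """
    if not 0.0 <= tcr_reduction < 1.0:
        raise ValueError("tcr_reduction must be in [0, 1)")
    years_needed = (target_year - start_year) / (1.0 - tcr_reduction)
    return start_year + years_needed


# A 25 per cent reduction in TCR, counting from an assumed "now" of 2014.
print(delayed_year(2014, 2050, 0.25))  # 2062.0
```

Under these simple assumptions the changes expected by 2050 arrive around 2062 instead, which lines up with the "early 2060s" in the quote: about a decade of delay, not a reprieve.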

So, Lewis and Curry 2014:

1) Cannot definitively say that climate sensitivity is on the low end of the IPCC spectrum

2) Has suspect results that appear to include numerous biases that would lead to lower TCR and ECS

3) Even if it were conclusive and accurate, would still not suggest that reductions in CO2 emissions are unnecessary. In fact, it adds to the scientific body of knowledge that temperatures will continue to rise to unsafe levels if we continue with the status quo, just maybe a decade later than other estimates.

(Note: I’ve started this new thread to discuss climate sensitivity specifically. It is an important topic that popped up in another thread and I felt it merited its own discussion. I would, as much as possible, like to keep the conversation on this subject…although this is likely wishful thinking)