I'm pretty sure this topic has been discussed again and again in this forum, but I've been through several related threads (probably 10+) and still haven't found the answer to my question. Rather than browse through every thread in the forum, I decided to start a new one and ask here.

I come from a metric background. Designing in US customary inch fractions while dimensioning in decimals is making me wonder about certain things.

If, say, I have a part that is 0.5" and I would like a tolerance of +/-0.01", it is not an issue. I can just label it 0.50" +/-0.01". During inspection of the part, QA will just have to check up to the 3rd decimal if the 1/10th rule of thumb is used for measurement tool accuracy.

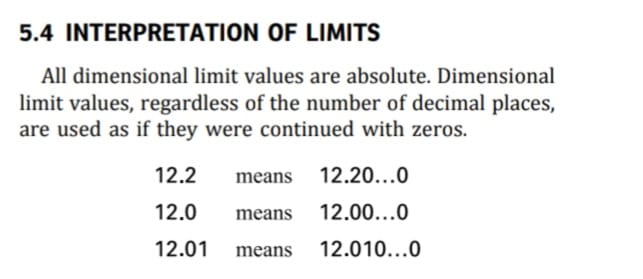

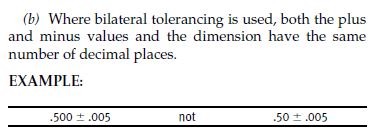

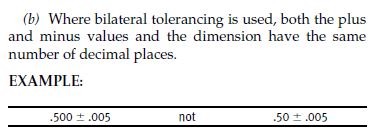

If I have a part that is 0.3125" and I would like to maintain the tolerance of +/-0.01", it is also not an issue within the drawing. I can just label it 0.3125" +/-0.0100", with trailing zeroes on the tolerance as per ASME Y14.5-2009, 2.3.2(b) - Bilateral Tolerancing.

However, when it comes to inspection of the part:

1. Will the 1/10th rule of thumb apply to the total value of the tolerance (i.e. 10% of 0.02" = 0.002"), meaning that the measurement system only needs to be accurate up to the third decimal, or

2. Would the trailing zeroes/number of decimals override it (i.e. the measurement system will have to be able to measure up to the fifth decimal)?
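To make the arithmetic behind option 1 concrete, here is a small Python sketch. The function name `gauge_resolution` and the 0.1 ratio are my own illustration of the 1/10th rule of thumb, not anything defined in ASME Y14.5. It only shows that the rule, as I understand it, works on the value of the tolerance band, so trailing zeroes do not change the result; it does not settle which of the two interpretations is correct.

```python
from decimal import Decimal

def gauge_resolution(plus: str, minus: str, ratio: str = "0.1") -> Decimal:
    """Resolution suggested by the 1/10th rule of thumb (hypothetical helper).

    plus/minus are the tolerance limits as written on the drawing, in inches.
    ratio is the rule-of-thumb fraction of the total tolerance band.
    """
    band = Decimal(plus) + Decimal(minus)  # total band, e.g. 0.01 + 0.01 = 0.02
    return band * Decimal(ratio)

# The tolerance written as +/-0.01 and as +/-0.0100 gives the same value.
print(gauge_resolution("0.01", "0.01"))      # 0.002
print(gauge_resolution("0.0100", "0.0100"))  # 0.00200, numerically the same
```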

It gets worse if I have a dimension that comes from a 32nd fraction, for example, 1.40625". Following the ASME Y14.5 rules, I suppose the tolerance would be written as +/-0.01000". What about the inspection then? Six-decimal accuracy on the measuring system?
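For reference, this is how I am getting the decimal labels from the fractions. It is only a sketch of my own reading of the trailing-zero rule (dimension and tolerance shown with the same number of decimal places); the helper name `label` is made up for illustration.

```python
from decimal import Decimal
from fractions import Fraction

def label(nominal: Fraction, tol: str) -> str:
    """Format an inch dimension and its +/- tolerance with matching decimals."""
    # Exact for binary inch fractions such as 5/16 or 45/32.
    value = Decimal(nominal.numerator) / Decimal(nominal.denominator)
    tolerance = Decimal(tol)
    # Use the larger decimal-place count of the two numbers.
    places = max(-value.as_tuple().exponent, -tolerance.as_tuple().exponent)
    step = Decimal(1).scaleb(-places)  # e.g. places=4 -> 0.0001
    return f'{value.quantize(step)}" +/-{tolerance.quantize(step)}"'

print(label(Fraction(1, 2), "0.01"))    # 0.50" +/-0.01"
print(label(Fraction(5, 16), "0.01"))   # 0.3125" +/-0.0100"
print(label(Fraction(45, 32), "0.01"))  # 1.40625" +/-0.01000"
```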

Drawing-wise, I can just label it as per the actual 3D dimension and the ASME rules, to be true to both the part and the rules. However, I would think that this has huge implications for manufacturing and inspection cost.

I'm sure that many forum members have encountered this in real life. I would appreciate hearing how this case is being handled.

------------------------------------

Also on another related topic:

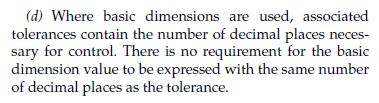

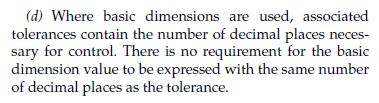

With regard to the attached snapshot, would it also be acceptable to do it the other way around? I suppose the implication would be similar to my question above.

1. Basic Dimension: 1.625"

2. Positional Tolerance: Diameter 0.02" (no trailing zeroes to match the Basic Dimension)

